The Math Behind Machine Learning

Starting with Linear Algebra

What is Linear Algebra?

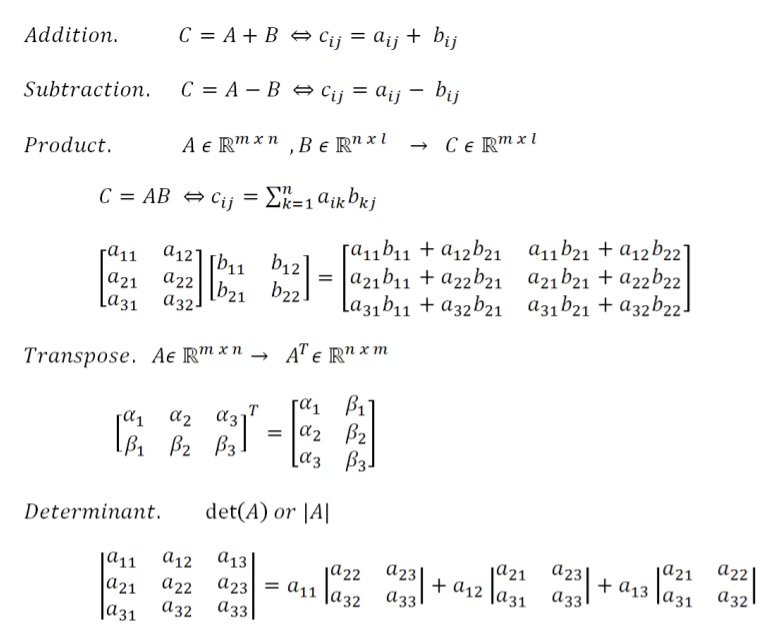

Linear algebra is an extremely important topic in physics, math, and computer science. In machine learning, it is used to pass information from layer to layer. Essentially, each layer takes a group of numbers (a vector), applies a transformation to it (usually a multiplication by a matrix of weights), and passes the result on to the next layer, where the process starts again.
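To make "passing information from layer to layer" concrete, here is a minimal sketch in plain Python. It assumes a tiny made-up network with hand-picked weights and biases, and it uses ReLU (replace negative values with zero) as the activation, which is a common choice but an assumption here. Each layer multiplies its input vector by a weight matrix, adds a bias, and hands the result to the next layer.

```python
# A tiny two-layer forward pass written with plain lists.
# The weights, biases and the ReLU activation are illustrative assumptions.

def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list of numbers)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def relu(vector):
    """Replace every negative entry with 0.0."""
    return [max(0.0, v) for v in vector]

def layer(weights, bias, inputs):
    """One layer: transform the inputs with the weights, add the bias, apply ReLU."""
    z = matvec(weights, inputs)
    return relu([zi + bi for zi, bi in zip(z, bias)])

W1 = [[1.0, -1.0], [0.5, 2.0], [-1.0, 0.0]]  # 3x2: takes 2 numbers, outputs 3
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 1.0, 1.0]]                       # 1x3: takes 3 numbers, outputs 1
b2 = [0.0]

x = [2.0, 1.0]      # input vector
h = layer(W1, b1, x)  # output of layer 1 becomes the input of layer 2
y = layer(W2, b2, h)
print(h, y)  # [1.0, 3.0, 0.0] [4.0]
```

The shape of each weight matrix decides how many numbers go in and how many come out, which is why the layers have to "fit" together.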

Where do we start?

The best place to start in linear algebra is with scalars and vectors. A scalar is a single number; multiplying a vector by a scalar stretches, shrinks, or flips that vector. A vector has both magnitude and direction, and is therefore made of multiple components. In two dimensions, the unit vectors î and ĵ point one unit along the x-axis and y-axis, and each component tells us how much to scale the matching unit vector. For example, the vector (3, 2) can be written as 3î + 2ĵ.
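A quick way to see scalars and basis vectors in action is a small sketch in plain Python, representing 2D vectors as tuples. The helper names (`scale`, `add`, `magnitude`) are just illustrative, not from any library. It builds 3î + 2ĵ from the unit vectors, then shows what scaling by 2 and by -1 does.

```python
import math

i_hat = (1.0, 0.0)  # unit vector along the x-axis
j_hat = (0.0, 1.0)  # unit vector along the y-axis

def scale(c, v):
    """Multiply every component of vector v by the scalar c."""
    return tuple(c * vi for vi in v)

def add(u, v):
    """Add two vectors component by component."""
    return tuple(ui + vi for ui, vi in zip(u, v))

def magnitude(v):
    """Length of v (Pythagorean theorem)."""
    return math.sqrt(sum(vi * vi for vi in v))

v = add(scale(3.0, i_hat), scale(2.0, j_hat))  # 3î + 2ĵ
print(v)              # (3.0, 2.0)
print(scale(2.0, v))  # (6.0, 4.0): same direction, twice as long
print(scale(-1.0, v)) # (-3.0, -2.0): same length, opposite direction
print(magnitude(v))   # sqrt(13), about 3.606
```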

Where does this topic fit into machine learning?

When dealing with neural networks, the first step is to initialize each layer. When this happens, a matrix of weights is also initialized, often with small random values. In order for the model to ‘learn’, these weights need to be adjusted over time: at each training step, they are nudged by a vector (or matrix) of changes that has been scaled by a small scalar called the learning rate. By repeating this, the model optimizes its weights and produces better outputs.
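Here is a minimal sketch of that idea in plain Python. It assumes the weights start as small uniform random values (one common option among several) and uses made-up "gradient" numbers in place of values a real training step would compute. The update subtracts the learning rate (a scalar) times the gradient from every weight.

```python
import random

def init_weights(n_out, n_in, scale=0.1, seed=0):
    """Create an n_out x n_in weight matrix of small random values in [-scale, scale]."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    return [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]

def update(weights, grads, learning_rate):
    """Return new weights: each weight minus learning_rate times its gradient."""
    return [[w - learning_rate * g for w, g in zip(w_row, g_row)]
            for w_row, g_row in zip(weights, grads)]

W = init_weights(3, 2)  # a layer that takes 2 inputs and produces 3 outputs
grads = [[0.5, -0.2], [0.1, 0.0], [-0.3, 0.4]]  # hypothetical gradient values
W_new = update(W, grads, learning_rate=0.01)
print(W_new)
```

Keeping the learning rate small means each step only changes the weights slightly, so training moves gradually instead of jumping around.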

How I learned

In order to understand linear algebra, I watched many videos and read various articles. My main source on the topic so far has been the YouTube channel “3Blue1Brown”, which has a series of roughly 20 videos explaining the inner workings of nearly every core idea in linear algebra.

Understanding How the Model Learns

The overarching idea behind training the model is an algorithm called gradient descent. Imagine you are blindfolded on a mountain and have to find your way to the bottom. Would you step in the direction where the ground slopes up, or where it slopes down? This is what a neural network does when it trains. It takes the derivative of its loss (a measure of how wrong its outputs were) with respect to each parameter, then adjusts each parameter a small ‘step’ in the opposite direction of that derivative, moving downhill toward a lower loss.
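The blindfolded-hiker idea fits in a few lines. This sketch assumes a made-up one-parameter loss, loss(w) = (w - 3)², whose lowest point is at w = 3, along with an arbitrary learning rate of 0.1 and 100 steps. At each step we compute the derivative and move w against it.

```python
def loss(w):
    """Toy loss: smallest (zero) when w equals 3."""
    return (w - 3.0) ** 2

def d_loss(w):
    """Derivative of the toy loss with respect to w."""
    return 2.0 * (w - 3.0)

def gradient_descent(w, learning_rate=0.1, steps=100):
    """Repeatedly step against the derivative to walk downhill."""
    for _ in range(steps):
        w = w - learning_rate * d_loss(w)
    return w

w_final = gradient_descent(0.0)  # start far from the minimum
print(w_final, loss(w_final))    # w ends up extremely close to 3
```

If the slope is positive, the update pushes w down; if it is negative, the update pushes w up. Either way, the parameter moves toward the bottom of the "mountain".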